Hi,

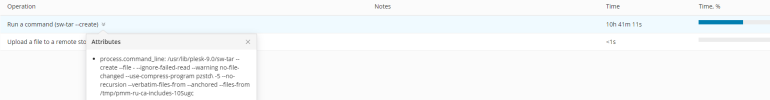

For the past 7 days, backups have been running extremely slowly (both to the internal and to the external repository). What is happening? Previously, backups completed in a few minutes; now, after 6 hours, the backup has only reached 30%. We also had this problem at the beginning of our hosting.

Operating system

Debian 9.13

Product

Plesk Obsidian

Version 18.0.41 Update #1, last updated: 22 Feb 2022 22:14:34
