- Server operating system version: Ubuntu 22.04

- Plesk version and microupdate number: Plesk Obsidian Web Pro Edition Version 18.0.61 Update #5

Hi there,

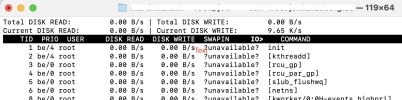

I have a fresh server running Ubuntu 22.04 LTS (Hetzner). Since we expect a lot of traffic, including database traffic, it's a pretty big machine: 192 GB RAM, a fast CPU, and 6x NVMe disks in RAID 10.

When I tested the write speed with

`dd if=/dev/zero of=/root/testfile bs=50G count=5 oflag=direct`

it showed me 1.7 GB/sec --> nice.
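To make the before/after comparison repeatable, the test could be wrapped in a small script along these lines. This is only a sketch: `dd_rate` and `bench_write` are hypothetical helper names, and the block size is an assumption (`bs=1G count=5` instead of `bs=50G`, because, as far as I understand, a single read or write call on Linux moves at most about 2 GiB, so very large `bs` values may not behave as intended).

```shell
#!/bin/sh
# Hypothetical helper: pull the throughput figure out of dd's final status
# line, e.g. "... copied, 3.1 s, 1.7 GB/s" -> "1.7 GB/s".
dd_rate() {
    awk -F', ' '/copied/ { print $NF }'
}

# Hypothetical helper: run the same direct-I/O write test several times.
# Arguments: target file, number of runs (default 3).
# Assumption: bs=1G count=5 (about 5 GiB per run) rather than bs=50G.
bench_write() {
    target=$1
    runs=${2:-3}
    i=1
    while [ "$i" -le "$runs" ]; do
        # dd prints its statistics on stderr, hence 2>&1.
        LC_ALL=C dd if=/dev/zero of="$target" bs=1G count=5 oflag=direct 2>&1 | dd_rate
        i=$((i + 1))
    done
    rm -f "$target"
}
```

Calling `bench_write /root/testfile 5` would then print one throughput figure per run, which makes it easier to see whether a number like 1.7 GB/sec is stable across runs.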

(I had installed AlmaLinux before; the value was the same.)

--> So, stable numbers: several tests, reboots, always around 1.7 GB/sec.

--> Now, after a clean Plesk install (via command line), the speed is constantly down to 970 MB/sec --> a bit more than 50% of the previous value. Nothing else has changed, and rebooting etc. doesn't help. I've uninstalled most of the Plesk extensions, but the number doesn't change.
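For reference, the ratio between the two measurements can be worked out with a one-liner. `pct_of` is a hypothetical helper name, and 1700 MB/sec is simply the 1.7 GB/sec figure from above expressed in MB.

```shell
# Hypothetical helper: express one throughput as a percentage of another.
pct_of() {
    awk -v now="$1" -v before="$2" 'BEGIN { printf "%.1f\n", now / before * 100 }'
}

pct_of 970 1700   # prints 57.1
```

So the write speed after the Plesk install is roughly 57% of the earlier value, i.e. a drop of about 43%.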

RAID seems OK. /proc/mdstat shows:

root@.. ~ # cat /proc/mdstat

Personalities : [raid1] [raid10] [linear] [multipath] [raid0] [raid6] [raid5] [raid4]

md2 : active raid10 nvme1n1p4[0] nvme5n1p4[5] nvme0n1p4[1] nvme3n1p4[4] nvme4n1p4[3] nvme2n1p4[2]

5609204736 blocks super 1.2 512K chunks 2 near-copies [6/6] [UUUUUU]

bitmap: 10/42 pages [40KB], 65536KB chunk

md0 : active raid1 nvme1n1p2[0] nvme5n1p2[5] nvme0n1p2[1] nvme3n1p2[4] nvme4n1p2[3] nvme2n1p2[2]

4189184 blocks super 1.2 [6/6] [UUUUUU]

md1 : active raid1 nvme1n1p3[0] nvme5n1p3[5] nvme0n1p3[1] nvme3n1p3[4] nvme4n1p3[3] nvme2n1p3[2]

1046528 blocks super 1.2 [6/6] [UUUUUU]

unused devices: <none>
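The same health check can be scripted instead of read by eye. The following is a minimal sketch, assuming the usual `/proc/mdstat` layout shown above; `md_degraded` is a hypothetical helper name. It only looks at the member status field (such as `[UUUUUU]`) and does not detect a running resync or rebuild.

```shell
# Hypothetical helper: read /proc/mdstat-style text on stdin and print the
# name of every md array whose member status (e.g. [UUUUUU]) contains "_",
# which marks a missing or failed member. Prints nothing if all are healthy.
md_degraded() {
    awk '
        /^md[0-9]+ :/ { name = $1 }
        /blocks/ && match($0, /\[[U_]+\]/) {
            if (index(substr($0, RSTART, RLENGTH), "_")) print name
        }
    '
}
```

Running `md_degraded < /proc/mdstat` on the output above should print nothing, since all three arrays report `[6/6] [UUUUUU]`.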

Has anybody seen anything similar? Is there a tip on how to solve this?

I explicitly chose RAID 10 instead of RAID 5 or 6 to get maximum write speed...

Thanks - BR

Martin