Hi,

We get a 504 Gateway Timeout whenever pm.max_children is reached. To make the website available again, we have to restart the PHP-FPM service.

The cause is the Google crawler: when its requests push PHP to the pm.max_children limit, the site returns 504 or becomes unavailable. We have tried different values for pm.max_children but still get the same issue.

My question is: how can we fix this? Alternatively, could we automatically restart PHP whenever the site returns 504, or limit how much CPU the Google crawler consumes?

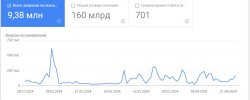

Attached are our PHP settings and the Google crawler stats.
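For reference, the auto-restart idea mentioned above could look roughly like the minimal sketch below. It is only an illustration under stated assumptions: the health-check URL, the `systemctl restart php-fpm` command (the service name varies by distribution, e.g. `php8.1-fpm`), and the check interval are all hypothetical placeholders. It treats the symptom only; it does not stop workers from filling up again.

```python
import subprocess
import time
import urllib.error
import urllib.request

# Hypothetical settings: adjust to your own server.
SITE_URL = "https://example.com/"                  # hypothetical health-check URL
RESTART_CMD = ["systemctl", "restart", "php-fpm"]  # hypothetical service name
CHECK_INTERVAL_S = 30
TIMEOUT_S = 10


def needs_restart(status):
    """Return True for gateway errors (502/504) or when no response arrived (None)."""
    return status is None or status in (502, 504)


def probe(url, timeout=TIMEOUT_S):
    """Return the HTTP status code for url, or None if the request failed outright."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # HTTPError is raised for 4xx/5xx responses and carries the status code.
        return err.code
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout, etc.
        return None


def main():
    """Poll the site forever and restart PHP-FPM when it looks unavailable."""
    while True:
        status = probe(SITE_URL)
        if needs_restart(status):
            print(f"got {status}, restarting PHP-FPM")
            subprocess.run(RESTART_CMD, check=False)
        time.sleep(CHECK_INTERVAL_S)
```

Calling `main()` from a small script run as a root-owned service would start the loop; a timeout or connection failure is treated the same as a 504.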
