LionKing

Regular Pleskian

- Server operating system version

- Ubuntu Linux

- Plesk version and microupdate number

- 18.0.48

Hi guys.

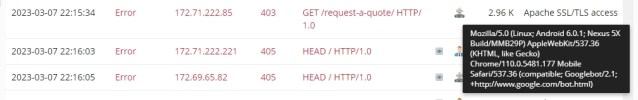

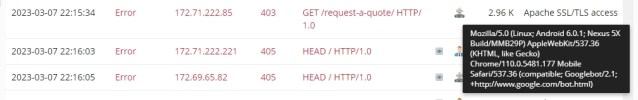

OK, so I have been looking into this for some time, and it seems that Plesk blocks traffic from Googlebot and other indexing bots requesting pages (probably also Bing, whose index Yahoo and DuckDuckGo also use):

Yandex too:

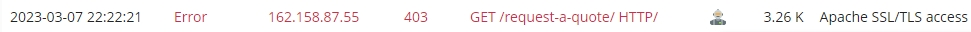

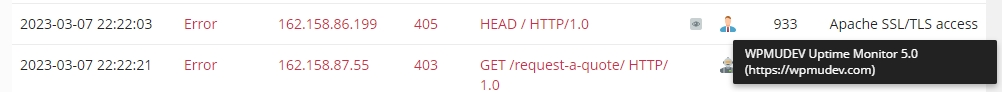

The same happens for the uptime monitoring service we use from WPMU Dev:

It is a WordPress Multisite (our corporate website), and when we ran the old server with cPanel this issue did not exist, which leads me to think that Plesk is the obvious culprit.

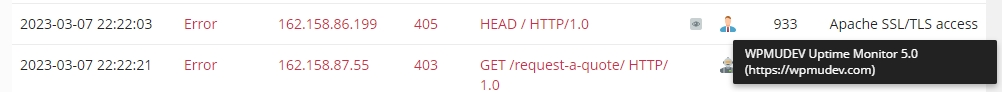

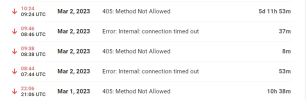

A quick sneak peek at WPMU Dev's uptime monitoring logs reveals this, which might be a clue (or not).

Unfortunately, they do not allow changing the request method, but it would probably not make a difference, since we already know that legitimate indexing bots are also being blocked.
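In case it helps anyone reproduce this from outside, here is a rough sketch of how the responses could be compared per User-Agent and request method. The User-Agent strings, the function names `probe` and `compare`, and the `example.com` URL are placeholders I picked for illustration, not anything taken from Plesk or WPMU Dev. Note that spoofing a User-Agent only exercises rules that match on the header; it says nothing about blocks based on the crawler's IP address.

```python
import urllib.error
import urllib.request

# Illustrative User-Agent strings (assumptions, not an authoritative list).
USER_AGENTS = {
    "browser": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
               "(KHTML, like Gecko) Chrome/120.0 Safari/537.36",
    "googlebot": "Mozilla/5.0 (compatible; Googlebot/2.1; "
                 "+http://www.google.com/bot.html)",
    "bingbot": "Mozilla/5.0 (compatible; bingbot/2.0; "
               "+http://www.bing.com/bingbot.htm)",
    "yandex": "Mozilla/5.0 (compatible; YandexBot/3.0; "
              "+http://yandex.com/bots)",
}


def probe(url, user_agent, method="GET", timeout=10):
    """Return the HTTP status code for one request.

    4xx/5xx responses are returned as codes instead of raising.
    Connection-level failures (DNS, refused, timeout) still raise.
    """
    req = urllib.request.Request(
        url, method=method, headers={"User-Agent": user_agent}
    )
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as exc:
        return exc.code


def compare(url, methods=("GET", "HEAD")):
    """Probe the URL with every User-Agent and method combination."""
    return {
        (name, method): probe(url, ua, method)
        for name, ua in USER_AGENTS.items()
        for method in methods
    }


if __name__ == "__main__":
    # Replace with the affected site; example.com is only a placeholder.
    for (name, method), status in compare("https://example.com/").items():
        print(f"{name:10s} {method:5s} -> {status}")
```

If the browser row comes back fine while the bot rows do not, that would point at a User-Agent based rule somewhere on the server; if all rows look the same from an ordinary connection, the blocking is more likely tied to something else, such as the source IP.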

Any ideas?

Thanks in advance.

Kind regards

LionKing
