thinkjarvis


- Server operating system version

- Ubuntu 24.04.3 LTS

- Plesk version and microupdate number

- 18.0.71 #2 build1800250729.09

Hi, we are seeing this behaviour on all of our Plesk servers.

Each server hosts a mix of WordPress and WordPress/WooCommerce sites, all with an excellent degree of optimisation. 99% of the sites pass Core Web Vitals, or are extremely close to passing where design decisions or plugins hold them back.

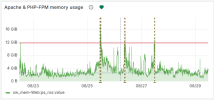

The screenshot above is for reference only, so you can see where we are seeing the issue.

Over a period of 24-72 hours:

- RAM usage and swap hit 100%.
- RAM usage is shown rising to roughly 90% "system" and approximately 10% "domains".
- We then get a spike in PHP-FPM when the client gets increased checkouts on their e-commerce store.
- Plesk cannot reallocate the memory.

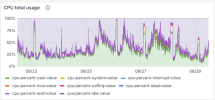

CPU still looks fine, but MariaDB then crashes.

Upon restarting MariaDB, memory usage starts from scratch and builds up again until the cycle repeats.

We have had to schedule a nightly cron job to restart MariaDB to stop this cycle from crashing the server.

Can anyone suggest how I can cap the RAM "used by system" so that the server manages itself and flushes things it shouldn't be keeping in RAM?

Can anyone suggest how I can stop MySQL memory usage from climbing uncontrollably?

Note that the InnoDB sizes are all set optimally. Changing these settings has made no difference to what we are seeing.
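One reason MariaDB can outgrow its InnoDB settings is that `innodb_buffer_pool_size` is only one of several buffers: others are allocated per connection, so peak usage scales with `max_connections`. The sketch below is a rough worst-case estimate, similar in spirit to the "maximum possible memory" figure MySQLTuner prints. The variable names are real MariaDB system variables, but the grouping, the choice of which ones to count, and all example values are assumptions for illustration, not figures from these servers. Real usage can differ (a single query can allocate some buffers more than once, and table caches, Performance Schema and allocator fragmentation are not counted).

```python
# Rough worst-case MariaDB memory estimate (an assumption-laden sketch):
# global buffers + max_connections * per-thread buffers.

KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB

# Buffers allocated once for the whole server.
GLOBAL_BUFFERS = (
    "innodb_buffer_pool_size",
    "innodb_log_buffer_size",
    "key_buffer_size",
    "query_cache_size",
    "aria_pagecache_buffer_size",
)

# Buffers that can be allocated per connection. Some can be allocated more
# than once by a single query, so this is not a hard upper bound.
PER_THREAD_BUFFERS = (
    "sort_buffer_size",
    "read_buffer_size",
    "read_rnd_buffer_size",
    "join_buffer_size",
    "thread_stack",
    "binlog_cache_size",
)


def worst_case_bytes(variables: dict[str, int]) -> int:
    """Estimate peak MariaDB memory (bytes) from SHOW GLOBAL VARIABLES values."""
    global_total = sum(variables.get(name, 0) for name in GLOBAL_BUFFERS)
    # In-memory temporary tables are capped by the smaller of these two
    # settings; this sketch counts that cap once, alongside the global buffers.
    global_total += min(variables.get("tmp_table_size", 0),
                        variables.get("max_heap_table_size", 0))
    per_thread = sum(variables.get(name, 0) for name in PER_THREAD_BUFFERS)
    return global_total + variables.get("max_connections", 0) * per_thread


# Hypothetical example values, NOT taken from the servers in this post.
EXAMPLE = {
    "innodb_buffer_pool_size": 16 * GIB,
    "innodb_log_buffer_size": 16 * MIB,
    "key_buffer_size": 128 * MIB,
    "query_cache_size": 0,
    "aria_pagecache_buffer_size": 128 * MIB,
    "tmp_table_size": 64 * MIB,
    "max_heap_table_size": 64 * MIB,
    "sort_buffer_size": 2 * MIB,
    "read_buffer_size": 128 * KIB,
    "read_rnd_buffer_size": 256 * KIB,
    "join_buffer_size": 256 * KIB,
    "thread_stack": 256 * KIB,
    "binlog_cache_size": 32 * KIB,
    "max_connections": 500,
}

if __name__ == "__main__":
    print(f"Worst case: {worst_case_bytes(EXAMPLE) / GIB:.2f} GiB")
```

Comparing this figure (with the real values from `SHOW GLOBAL VARIABLES`) against the 40 GB of RAM, minus what PHP-FPM needs, shows whether `max_connections` alone could push the server into swap.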

I will point out that the actual "RAM used" value is well within tolerances and nowhere near capacity.
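Since "used by system" is high while "RAM used" looks fine, it may help to check how much of the total is reclaimable page cache. The sketch below reads the kernel's own figures from `/proc/meminfo`; `MemAvailable` is the kernel's estimate of memory available for new work without swapping, and it already takes reclaimable cache into account. How Plesk computes its "used by system" figure is an assumption here and may differ from both numbers.

```python
def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value}; most values are kB."""
    values: dict[str, int] = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts:
            values[name.strip()] = int(parts[0])
    return values


def summarize(values: dict[str, int]) -> dict[str, float]:
    """Used-vs-cache breakdown as percentages of MemTotal, plus swap in use (kB)."""
    total = values["MemTotal"]
    # Memory the kernel does not consider available, even after dropping cache.
    used = total - values["MemAvailable"]
    # Buffers, page cache and reclaimable slab: usually released under pressure.
    cache = (values.get("Buffers", 0) + values.get("Cached", 0)
             + values.get("SReclaimable", 0))
    return {
        "used_pct": 100 * used / total,
        "cache_pct": 100 * cache / total,
        "swap_used_kb": float(values.get("SwapTotal", 0) - values.get("SwapFree", 0)),
    }
```

On the server itself this would be called as `summarize(parse_meminfo(open("/proc/meminfo").read()))`. A high `cache_pct` with a modest `used_pct` would suggest the "system" figure is mostly cache; a high `used_pct` together with growing swap points at real process memory.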

The server has 18 CPU cores and 40 GB of RAM in an enterprise server setup.

The hosting provider is a managed host and has had multiple Linux engineers look at the problem, but we are struggling to find a solution.

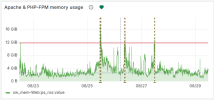

Last 7 days: each drop in memory is us restarting MariaDB because the websites become slow and then unresponsive.

Each peak in the graph is a crash, which correlates perfectly with MySQL becoming too full.

The CPU also correlates with MySQL and PHP-FPM usage.

Swap has been adjusted over time, but you can see that our last crash, a couple of days ago, spiked swap usage to the maximum.
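To confirm which processes actually own the swapped-out memory when swap fills, the per-process `VmSwap` and `VmRSS` fields in `/proc/<pid>/status` can be ranked. This is a minimal sketch; the process name for MariaDB is assumed to be `mariadbd` (older packages use `mysqld`), and the helper names are hypothetical.

```python
import os


def parse_status(text: str) -> dict[str, str]:
    """Parse /proc/<pid>/status text into {field: raw value}."""
    out: dict[str, str] = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            out[key] = value.strip()
    return out


def kb(value: str) -> int:
    """Turn '12345 kB' into 12345; missing fields (kernel threads) count as 0."""
    return int(value.split()[0]) if value else 0


def top_swap(statuses: dict[int, str], n: int = 5) -> list[tuple[int, str, int, int]]:
    """Return (pid, name, swap_kb, rss_kb) for the n processes using most swap."""
    rows = []
    for pid, text in statuses.items():
        fields = parse_status(text)
        rows.append((pid, fields.get("Name", "?"),
                     kb(fields.get("VmSwap", "")), kb(fields.get("VmRSS", ""))))
    rows.sort(key=lambda row: row[2], reverse=True)
    return rows[:n]


def load_statuses() -> dict[int, str]:
    """Read the status file of every process currently visible in /proc."""
    statuses: dict[int, str] = {}
    for entry in os.listdir("/proc"):
        if entry.isdigit():
            try:
                with open(f"/proc/{entry}/status") as f:
                    statuses[int(entry)] = f.read()
            except OSError:  # process exited or access was denied
                continue
    return statuses
```

Running `top_swap(load_statuses())` as root shortly before a restart would show whether swap is dominated by `mariadbd`, by PHP-FPM workers, or by something else.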

The traffic figures do not directly correlate, but the PHP-FPM spikes correlate with increased checkout activity in line with a marketing schedule.
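Because the crashes line up with PHP-FPM spikes, a simple memory budget can show whether MariaDB and PHP-FPM at full load can fit in RAM together. The sketch assumes each pool can reach its `pm.max_children` limit with workers at a measured average RSS; the pool names, sizes and the 2 GB OS reserve are hypothetical. Summing RSS counts shared pages in every worker, so this tends to overestimate PHP-FPM usage.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    name: str
    max_children: int     # pm.max_children for this PHP-FPM pool
    avg_worker_mb: float  # measured average RSS of one worker, in MB


def php_fpm_peak_mb(pools: list[Pool]) -> float:
    """Memory if every pool runs at pm.max_children simultaneously."""
    return sum(p.max_children * p.avg_worker_mb for p in pools)


def headroom_mb(total_ram_mb: float, mariadb_mb: float, pools: list[Pool],
                os_reserve_mb: float = 2048) -> float:
    """RAM left over at peak; a negative result means the server must swap."""
    return total_ram_mb - os_reserve_mb - mariadb_mb - php_fpm_peak_mb(pools)


# Hypothetical pools and sizes, for illustration only.
pools = [Pool("shop", 40, 120.0), Pool("blog", 10, 80.0)]
print(headroom_mb(40960, 18000, pools))
```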

- MySQL is exceeding the limits set in the innodb_ settings.
- It is not emptying itself.
- Memory used by the system hits 90% of what is available.
- MariaDB also eventually flags 90% of what is available.
- Everything crashes at peak load.
- The crashes only occur when MySQL hits its critical point and swap fills up.

Is there a setting I am missing that should specifically purge MySQL? A timeout value?

Note that we have run MySQLTuner and further tweaked those settings.

We have tried high and low values and are still not seeing memory use stabilise, just a gradual upward line.
