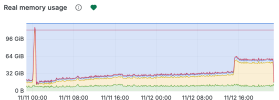

This may be true, but the server has 128 GB of RAM; the daily script shouldn't have done that.

Does this coincide with a backup via the backup manager?

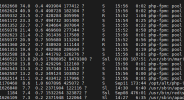

We have found that the backup manager, when backing up databases, drives up MySQL/MariaDB memory usage, and that memory is not released afterwards. This can leave the DB holding too much memory relative to php-fpm. The two then compete for the same RAM, which can lead the server to kill MySQL/MariaDB.
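To see whether the DB and php-fpm can outgrow the box together, it helps to add up their worst cases. The sketch below does that arithmetic. Every figure in it (buffer pool size, post-backup growth, `pm.max_children`, per-worker size, OS reserve) is a hypothetical placeholder, not a measurement from our server.

```python
"""Rough worst-case RAM budget for a server running MySQL/MariaDB and php-fpm.

All figures are hypothetical placeholders; substitute your own measurements.
"""

GIB = 1024 ** 3
MIB = 1024 ** 2


def memory_headroom(total_ram: int, db_peak: int, fpm_max_children: int,
                    fpm_child_rss: int, os_reserve: int) -> int:
    """Bytes left over when the DB and every php-fpm worker peak at once.

    A negative result means the two together can exceed physical RAM,
    which is when the system may start killing processes.
    """
    fpm_peak = fpm_max_children * fpm_child_rss
    return total_ram - os_reserve - db_peak - fpm_peak


if __name__ == "__main__":
    # Hypothetical: 64 GiB buffer pool plus 16 GiB of memory still held
    # by the DB after a backup run.
    db_peak = 64 * GIB + 16 * GIB
    headroom = memory_headroom(
        total_ram=128 * GIB,
        db_peak=db_peak,
        fpm_max_children=400,     # hypothetical pm.max_children summed over pools
        fpm_child_rss=128 * MIB,  # hypothetical average resident size per worker
        os_reserve=4 * GIB,
    )
    print(f"headroom: {headroom / GIB:.1f} GiB")  # prints "headroom: -6.0 GiB"
```

With these made-up numbers a 128 GB server is already 6 GiB overcommitted, so memory the DB keeps after a backup is enough to push it over.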

Solution: How did we fix our issues?

We took a multi-pronged approach, with the goal of reducing memory consumption:

- We switched the database's memory allocator to TCMalloc, which reduced memory usage significantly.

- Reduced the InnoDB buffer pool (`innodb_buffer_pool_size`) to a level more in line with the table sizes of the biggest website on the server.

- Manually optimised the database of the biggest site: removing redundant records from the client's WordPress installation roughly halved the size of the database.

- WP_Options had become badly bloated over time. We also cleaned out and deleted other unused tables.

- This process paid off twice: reducing the size of the DB also reduced the time php-fpm requests take to complete, bringing down overall memory usage. By shrinking the database, we also reduced the size of the open table cache and the general DB caches managed by Plesk.

- Changed how HTTP requests work on the site: we enabled script merging on some parts of the site, which reduces the number of HTTP requests and has a knock-on effect on php-fpm load.

- Added Redis to all sites on the server.

- Enabled Enhanced DoS protection in Imunify360.

- Enabled rate limiting in Wordfence on each site individually, with a blocking policy.

- We also use a DB optimisation stack, bought for about £5k on a studio licence, which reduces the time DB and php-fpm operations take.
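One way to make "a level more relevant to the table sizes" concrete is to start from the real data and index size of your InnoDB tables and add some headroom. The sketch below assumes you have already pulled per-table sizes, e.g. with `SELECT data_length + index_length FROM information_schema.TABLES WHERE engine = 'InnoDB';`. The 25% headroom and the 128 MiB rounding unit are assumptions, not the values we used (128 MiB is the usual default `innodb_buffer_pool_chunk_size`; check your own server).

```python
"""Sketch: suggest an innodb_buffer_pool_size from measured table sizes.

Assumptions (not from our setup): 25% headroom, 128 MiB rounding unit.
"""

MIB = 1024 ** 2


def suggest_buffer_pool(table_bytes, headroom_pct=25, unit=128 * MIB):
    """Sum of InnoDB data+index bytes plus headroom, rounded up to `unit`.

    Integer arithmetic throughout to avoid float rounding surprises.
    """
    working_set = sum(table_bytes)
    target_x100 = working_set * (100 + headroom_pct)  # target size * 100
    units = -(-target_x100 // (100 * unit))           # ceiling division
    return max(units, 1) * unit                       # never below one unit


if __name__ == "__main__":
    # Hypothetical per-table sizes for the largest site's database.
    sizes = [2048 * MIB, 1536 * MIB, 512 * MIB]
    size = suggest_buffer_pool(sizes)
    print(f"innodb_buffer_pool_size = {size // MIB}M")  # prints 5120M
```

Halving the database, as described above, halves the input to this calculation, which is why the buffer pool could come down as well.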

The result was a 16 GB drop in MySQL memory usage over a 24-hour period.

Our nightly backup spikes have also become smaller because the DB is half the size it was, so less RAM is allocated to back up the databases.

I hope this helps.

HOWEVER, a spike like that could also have been caused by a random bot attack, or by an inefficient script being hit by too many real visitors at once.
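A quick way to tell those cases apart is to count requests per client IP per minute in the site's access log around the time of the spike. A few IPs with very high counts points towards bots, while many IPs with modest counts hitting the same URL looks more like real traffic on a slow script (though a distributed botnet can look like that too). The sketch below assumes the common/combined log format; the threshold and sample lines are hypothetical.

```python
import re
from collections import Counter

# Start of a common/combined-format log line: client IP, then the
# timestamp truncated to the minute, e.g. "10/Oct/2023:13:55".
LINE_RE = re.compile(r'^(\S+) \S+ \S+ \[(\d{2}/\w{3}/\d{4}:\d{2}:\d{2})')


def busiest_clients(lines, threshold):
    """Return {(ip, minute): count} for clients above `threshold` requests/minute."""
    counts = Counter()
    for line in lines:
        m = LINE_RE.match(line)
        if m:  # silently skip lines in other formats
            counts[(m.group(1), m.group(2))] += 1
    return {key: n for key, n in counts.items() if n > threshold}


if __name__ == "__main__":
    # Hypothetical sample lines (documentation IP ranges).
    sample = [
        '203.0.113.5 - - [10/Oct/2023:13:55:01 +0000] "GET /?s=a HTTP/1.1" 200 512',
        '203.0.113.5 - - [10/Oct/2023:13:55:02 +0000] "GET /?s=b HTTP/1.1" 200 512',
        '203.0.113.5 - - [10/Oct/2023:13:55:03 +0000] "GET /?s=c HTTP/1.1" 200 512',
        '198.51.100.7 - - [10/Oct/2023:13:55:04 +0000] "GET / HTTP/1.1" 200 1024',
    ]
    print(busiest_clients(sample, threshold=2))
    # prints {('203.0.113.5', '10/Oct/2023:13:55'): 3}
```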