- Server operating system version

- AlmaLinux 8.4 x86_64

- Plesk version and microupdate number

- Plesk Obsidian 18.0.41.1

I have two domains set up on Plesk, let's say usersite.com and api.usersite.com. usersite.com is powered by Nuxt.js, a front-end framework that runs on Node.js. It makes API calls to api.usersite.com, which is a Laravel application served by Octane (so it uses Swoole instead of PHP-FPM). Both projects run inside Docker containers.

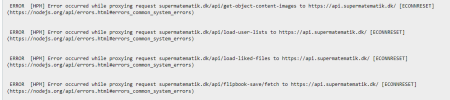

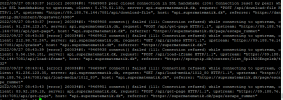

Now to the problem: when traffic to usersite.com gets slightly higher (around 200 users per minute), the API site starts dropping connections, which immediately results in 504 errors. Could someone point me in the right direction as to why this might be happening? At first I thought it was a PHP-FPM issue, which is why I reworked the project and dockerized it so that it is served by Octane instead of PHP-FPM. That doesn't seem to have helped, however. It's worth mentioning that nginx is used as a reverse proxy, so perhaps it has something to do with nginx limiting the request count?
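In case it helps anyone reproduce this, below is a rough sketch of a burst test that sends a batch of concurrent requests and tallies the status codes, so the share of 504 responses is visible. It is only an illustration: `API_URL`, `fetch_status`, `run_burst`, and the `total`/`concurrency` values are placeholders I made up, and 200 requests is just a stand-in for "200 users per minute", not a measured request rate.

```python
import collections
import concurrent.futures
import urllib.error
import urllib.request

# Placeholder endpoint (assumption); replace with a real API route.
API_URL = "https://api.usersite.com/"


def fetch_status(url, timeout=10.0):
    """Return the HTTP status code for one GET, or a short error label."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as exc:
        # Non-2xx answers (for example a 504 from the proxy) land here.
        return exc.code
    except (urllib.error.URLError, OSError) as exc:
        # Connection refused, DNS failure, timeout, and similar.
        return type(exc).__name__


def run_burst(fetch, url, total=200, concurrency=20):
    """Call fetch(url) `total` times using `concurrency` threads.

    Returns a Counter mapping each status code or error label to its count.
    """
    with concurrent.futures.ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = pool.map(lambda _: fetch(url), range(total))
        return collections.Counter(results)
```

Calling `run_burst(fetch_status, API_URL)` returns the tally for one burst. Running it once against the public domain (through nginx) and once directly against the container's published port, if it has one, might show whether the 504s originate at the proxy or at Octane.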
