Thank you very much for your post! I'm not 100% sure I fully understood the possible danger illustrated in this example, though.

I get that some FTP clients will not support resuming, fair enough!

Did you mean that there might be a situation where an FTP client that does not support resuming ends up spawning multiple copies of the same big file, and therefore causes a disk space problem?

Cheers

@realaaa

I think that we have to avoid misunderstandings.

The FTP client is essentially the "source", connecting to a "target" - the FTP server.

To be precise, the "source" is actually a server upon which the FTP client resides - when referring to the "source", it is both the server and the FTP client.

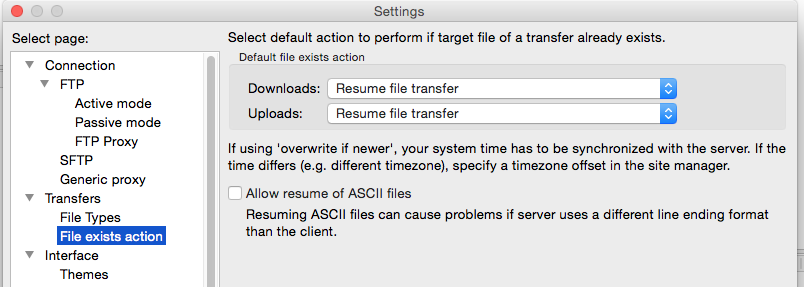

If the FTP client does not support file upload resumption, then no issues will arise, regardless of whether the target supports resumption.

If the FTP client does support file upload resumption and the target does not, then no issues should occur.

Please note the use of "should", because it is not a certainty - there are a lot of malcoded FTP clients out there, and a small share of them do not properly handle the FTP server (the target) telling them that file upload resumption is not supported.

The "should" is an indication of the theory, but practice can differ.
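For illustration, here is a minimal Python sketch of what "properly handling" such a refusal could look like for an uploader built on the standard-library `ftplib`. It assumes that the size of the partial remote file (from `SIZE`) is where the upload should resume, and that the server signals "no resumption" by rejecting the `REST` command with a 5xx reply. The function name `resume_upload` is hypothetical; real backup tools have their own logic.

```python
import ftplib
from typing import BinaryIO


def resume_upload(ftp: ftplib.FTP, fp: BinaryIO, remote_name: str) -> int:
    """Upload fp to remote_name, resuming from the remote size if possible.

    Returns the byte offset the successful upload started from.
    """
    ftp.voidcmd("TYPE I")  # SIZE is only meaningful in binary mode
    try:
        offset = ftp.size(remote_name) or 0  # size() returns None on odd replies
    except ftplib.error_perm:
        offset = 0  # e.g. 550: no partial file yet, or SIZE not supported

    if offset:
        try:
            fp.seek(offset)  # send only the part the server does not have yet
            ftp.storbinary(f"STOR {remote_name}", fp, rest=offset)
            return offset
        except ftplib.error_perm:
            # The server refused REST (resumption not supported):
            # fall back to one full, overwriting upload instead of retrying.
            pass

    fp.seek(0)
    ftp.storbinary(f"STOR {remote_name}", fp)
    return 0
```

Instead of retrying a refused resume over and over, the sketch falls back to a single full upload that overwrites the partial file.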

If the FTP client (source) and the FTP server (target) are BOTH configured to allow for file upload resumption, then issues can occur.

My example was just a simple illustration of a very likely scenario that can and often will occur - failure to upload due to connectivity issues.

Connectivity issues can occur frequently - consider network maintenance in a datacenter and/or network switch failures and/or servers being down.

Even a reboot of a server and/or some Plesk related installation (or maintenance) activities can cause - temporary - connectivity issues.

The example is actually based upon the scenario where a source (the FTP client) is still alive, but the target (the FTP server) is not - temporarily or permanently.

The example is also based upon a scenario where a source will retry to re-establish connection with the target over and over again.

Again, it has to be stated that there are a lot of malcoded FTP clients out there: for some of them, the mere fact that the target (the FTP server) does not respond is apparently not enough reason to stop attempting to transfer files.
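By contrast, a better-behaved client bounds its retries. A common pattern is capped exponential backoff; the sketch below only computes the waiting times, and its function and parameter names are hypothetical, not taken from any particular FTP client. Real implementations usually add some random jitter as well.

```python
def backoff_delays(base: float, factor: float, cap: float, max_attempts: int) -> list[float]:
    """Seconds to wait before each reconnection attempt; the client gives up afterwards."""
    delays = []
    delay = base
    for _ in range(max_attempts):
        delays.append(min(delay, cap))  # never wait longer than `cap`
        delay *= factor                 # grow the wait after every failed attempt
    return delays
```

With `backoff_delays(1, 2, 60, 8)` the client waits 1, 2, 4, 8, 16, 32, 60 and 60 seconds and then stops, instead of hammering an unreachable target indefinitely.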

However, we are not focusing on the peculiar situation where the target does not respond at all - hence, not focusing on a permanent loss of connection.

In essence, we focus on the situation where a target is temporarily not able to connect and/or temporarily not able to persist the connection.

If the connection does not persist, then the resumption protocol will become "active" as soon as there is a hiccup in the connection.

Let's assume that the hiccup occurs at time t and that a backup has been made at time t-1.

The backup made at time t-1 has to be transferred to the target, but the transfer cannot be completed as a result of the hiccup at time t.

Now suppose that at time t+1 the target is up again - in most cases, the resumption protocol will allow for the completion of the file transfer.

However, let's change the story a bit.

We still have a backup at time t-1 and a hiccup in the connection at time t ... but now we also have backups at times t+1, t+2, t+3 and t+4.

If and when the target comes back up at time t+5, the resumption protocol will try to resume the uploads of all backups, that is, the backups from t-1, t+1, t+2, t+3 and t+4.

Many FTP clients try to speed up transfers by using multiple simultaneous connections (parallel transfers) that take up most of the available bandwidth.

As a result, if the individual backups are "large enough", then five (5) simultaneous uploads will consume almost all, or even all, of the available bandwidth.
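To make "large enough" a bit more concrete, here is a rough model in Python. It assumes that the resumed uploads start at the same moment and split the link evenly, with no protocol overhead; the function name, the sizes and the 10 MB/s link are made-up illustrations, not measurements.

```python
def fair_share_completion(sizes_mb: list[float], bandwidth_mb_s: float) -> list[float]:
    """Completion time (s) of transfers that start together and share the link evenly."""
    order = sorted(range(len(sizes_mb)), key=lambda i: sizes_mb[i])
    done = [0.0] * len(sizes_mb)
    t = 0.0
    prev = 0.0
    active = len(sizes_mb)
    for i in order:
        # Until the smallest remaining transfer finishes, each of the
        # `active` transfers gets bandwidth / active.
        t += (sizes_mb[i] - prev) * active / bandwidth_mb_s
        done[i] = t
        prev = sizes_mb[i]
        active -= 1
    return done
```

For five 100 MB backups on a 10 MB/s link, `fair_share_completion([100] * 5, 10)` gives 50 seconds for every upload: the link is saturated for the whole 50 seconds and none of the backups is safely on the target before then, whereas uploading them one at a time would finish the first one after 10 seconds.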

Stated differently, file upload resumption can and will make the target (the FTP server) slow or even unresponsive when a lot of data has to be transferred.

In addition, whenever the target becomes less responsive, the source (the FTP client) also becomes less responsive: the transfers to be resumed are added to a queue that can keep growing.

In short, in "perfect" conditions, a "perfect storm" can occur where both the source and the target can become very (very) unresponsive .... and even fail.

Is file upload resumption bad?

No, in theory it is not.

However, many relevant factors have been ignored in the example - again, it was just an example to illustrate.

The perfect conditions for the perfect storm are a (logical) result of factors like:

1 - bandwidth: if there is sufficient bandwidth, then an issue is not very likely ... sufficient bandwidth can ensure that resumed file uploads complete fast enough, BEFORE other issues can arise,

2 - network policies: if network policies are in place, then the traffic spikes caused by file upload resumption can be throttled or even blocked ... in the absence of such network policies, resumed file uploads can complete BEFORE other issues arise,

3 - bandwidth throttling config on both the source and the target: if bandwidth is throttled by (custom) config on both the source and the target, then it could PREVENT bandwidth overuse, but that is not a certainty ... HOWEVER, bandwidth throttling might itself cause resumed file uploads to create issues similar to those in the perfect storm: limiting bandwidth slows everything down and can hence result in both the source and the target server becoming less responsive (and for a longer duration),

4 - availability of resources: the presence of sufficient disk space (and memory) can PREVENT a perfect storm ... after all, disk space is consumed temporarily by all the files that still have to be transferred, so if disk space is sufficient, then problems are less likely to occur,

5 - size per backup and frequency of backups: the number of potential issues increases with the size per backup and the frequency of backups ... if there are more backups to be resumed and each of them is relatively large, then the factors mentioned in points 1 to 4 can create a perfect storm!

6 - downtime: the longer the connection to the target stays down, the more backups and the more data will have to be transferred by resumption, hence increasing the probability that the perfect storm occurs!
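Points 1, 5 and 6 can be combined into a small back-of-the-envelope model. The sketch below assumes one backup of fixed size per period and a link that can move a fixed amount of data per period while it is up; the function name and all numbers are hypothetical.

```python
def backlog_per_period(backup_gb: float, capacity_gb: float,
                       down: set[int], periods: int) -> list[float]:
    """Untransferred data (GB) at the end of each period.

    One backup of backup_gb is created per period; while the link is up,
    at most capacity_gb of the backlog can be transferred per period.
    """
    queue = 0.0
    history = []
    for p in range(periods):
        queue += backup_gb                  # a new backup joins the queue
        if p not in down:
            queue -= min(queue, capacity_gb)  # drain what the link allows
        history.append(queue)
    return history
```

With 10 GB backups, 12 GB of capacity per period and an outage covering periods 1 to 4, 40 GB is queued when the link returns, but the backlog only shrinks by 12 - 10 = 2 GB per period, so it takes about 40 / 2 = 20 periods to clear. The smaller the margin between capacity and backup volume, the longer the source and target stay under load.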

All of the above is a far from exhaustive summary, but it gives you an idea of how everything can accumulate into a perfect storm.

And we did not even talk about the dynamic relation between issues on the source and target servers, and/or the issues that might result from a backup protocol that is really resource hungry!

In practice, file upload resumption is not bad at all ..... but it can become bad very quickly.

In practice, one should always follow some golden rules, like activating bandwidth throttling (if possible and necessary) and not running all backups at once.
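Both golden rules can be sketched in a few lines of Python. `Throttle` is a hypothetical callback for the standard-library `ftplib.FTP.storbinary`, which calls its `callback` with each block after sending it; the callback sleeps whenever the upload runs ahead of the chosen rate. `staggered_starts` (also hypothetical) simply spreads backup jobs over a time window instead of starting them all at once. These are not Plesk features or settings; they only illustrate the idea.

```python
import time
from typing import Callable


class Throttle:
    """storbinary callback that keeps the average upload rate below max_bytes_per_sec."""

    def __init__(self, max_bytes_per_sec: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.rate = max_bytes_per_sec
        self.clock = clock
        self.sleep = sleep
        self.start = clock()
        self.sent = 0

    def __call__(self, block: bytes) -> None:
        self.sent += len(block)
        # How long the transfer should have taken so far at the target rate.
        target_elapsed = self.sent / self.rate
        actual_elapsed = self.clock() - self.start
        if target_elapsed > actual_elapsed:
            self.sleep(target_elapsed - actual_elapsed)


def staggered_starts(jobs: list[str], window_minutes: int) -> dict[str, int]:
    """Start offsets (minutes after the window opens), spread evenly over the window."""
    step = window_minutes // max(len(jobs), 1)
    return {job: i * step for i, job in enumerate(jobs)}
```

For example, `ftp.storbinary("STOR backup.tar", fp, callback=Throttle(2_000_000))` would keep that upload at roughly 2 MB/s on average, and `staggered_starts(["site-a", "site-b", "site-c", "site-d"], 120)` starts the four jobs at minutes 0, 30, 60 and 90.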

After all, even when file upload resumption is disabled, the perfect storm can still occur ... points 1 to 6 are often, if not always, valid.

Stated differently, the "perfect storm" can always occur, but without file upload resumption it is a manageable breeze .... otherwise it is a hurricane!

In short, to put it simply: it is recommended to leave file upload resumption disabled.

It is not about bad or good, but it is all about "manageability of issues" when those issues occur.

That is all I wanted to illustrate!

Kind regards....

talk.plesk.com
