- Server operating system version: Debian 11.5

- Plesk version and microupdate number: 18.0.47 u2

Hello,

I'm having an issue with passenger.log. It fills up every 5 seconds with entries like these, even when no website is using it:

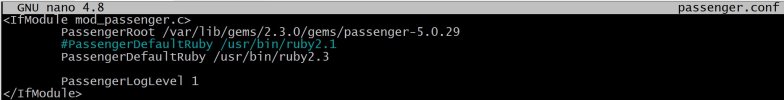


[ D 2022-10-13 12:07:40.0001 3592350/T1g age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0002 3592350/T1g Ser/Server.h:691 ]: [ServerThr.23] Updating statistics

[ D 2022-10-13 12:07:40.0002 3592350/T3 age/Cor/App/Poo/AnalyticsCollection.cpp:116 ]: Analytics collection time...

[ D 2022-10-13 12:07:40.0003 3592350/T3 age/Cor/App/Poo/AnalyticsCollection.cpp:143 ]: Collecting process metrics

[ D 2022-10-13 12:07:40.0003 3592350/T1s age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0003 3592350/T3 age/Cor/App/Poo/AnalyticsCollection.cpp:151 ]: Collecting system metrics

[ D 2022-10-13 12:07:40.0003 3592350/T1c age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0003 3592350/T1r age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0004 3592350/T1s Ser/Server.h:691 ]: [ServerThr.29] Updating statistics

[ D 2022-10-13 12:07:40.0004 3592350/T1c Ser/Server.h:691 ]: [ServerThr.21] Updating statistics

[ D 2022-10-13 12:07:40.0005 3592350/T1r Ser/Server.h:691 ]: [ServerThr.28] Updating statistics

[ D 2022-10-13 12:07:40.0011 3592350/T3 age/Cor/App/Poo/AnalyticsCollection.cpp:67 ]: Analytics collection done; next analytics collection in 4.999 sec

[ D 2022-10-13 12:07:40.0040 3592350/Ti age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0041 3592350/T11 age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0041 3592350/T2c age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0041 3592350/T22 age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0041 3592350/Ti Ser/Server.h:691 ]: [ServerThr.6] Updating statistics

[ D 2022-10-13 12:07:40.0041 3592341/T5 Ser/Server.h:691 ]: [WatchdogApiServer] Updating statistics

[ D 2022-10-13 12:07:40.0043 3592350/Tu age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0041 3592350/T28 age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0042 3592350/T18 age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0041 3592350/T11 Ser/Server.h:691 ]: [ServerThr.15] Updating statistics

[ D 2022-10-13 12:07:40.0041 3592350/Tg age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0042 3592350/T1k age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0042 3592350/T2c Ser/Server.h:691 ]: [ServerThr.39] Updating statistics

[ D 2022-10-13 12:07:40.0042 3592350/T12 age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0042 3592350/T22 Ser/Server.h:691 ]: [ServerThr.34] Updating statistics

[ D 2022-10-13 12:07:40.0043 3592350/T26 age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/T1m age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/Tw age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/T2a age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/T24 age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/Tk age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/Te age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/T1w age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/T16 age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/T2e age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/Tt age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/T1y age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/T1a age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0044 3592350/Tu Ser/Server.h:691 ]: [ServerThr.12] Updating statistics

[ D 2022-10-13 12:07:40.0043 3592350/T1e age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/T2g Ser/Server.h:691 ]: [ApiServer] Updating statistics

[ D 2022-10-13 12:07:40.0043 3592350/Tz age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/Tr age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0044 3592350/T28 Ser/Server.h:691 ]: [ServerThr.37] Updating statistics

[ D 2022-10-13 12:07:40.0043 3592350/T1i age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/T14 age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/T1u age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/Tm age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0043 3592350/T21 age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0044 3592350/T18 Ser/Server.h:691 ]: [ServerThr.19] Updating statistics

[ D 2022-10-13 12:07:40.0045 3592350/Tg Ser/Server.h:691 ]: [ServerThr.5] Updating statistics

[ D 2022-10-13 12:07:40.0045 3592350/T1k Ser/Server.h:691 ]: [ServerThr.25] Updating statistics

[ D 2022-10-13 12:07:40.0045 3592350/T12 Ser/Server.h:691 ]: [ServerThr.16] Updating statistics

[ D 2022-10-13 12:07:40.0046 3592350/T1o age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0046 3592350/T26 Ser/Server.h:691 ]: [ServerThr.36] Updating statistics

[ D 2022-10-13 12:07:40.0046 3592350/T1m Ser/Server.h:691 ]: [ServerThr.26] Updating statistics

[ D 2022-10-13 12:07:40.0046 3592350/Tw Ser/Server.h:691 ]: [ServerThr.13] Updating statistics

[ D 2022-10-13 12:07:40.0047 3592350/T2a Ser/Server.h:691 ]: [ServerThr.38] Updating statistics

[ D 2022-10-13 12:07:40.0047 3592350/Tp age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0047 3592350/T24 Ser/Server.h:691 ]: [ServerThr.35] Updating statistics

[ D 2022-10-13 12:07:40.0047 3592350/Tk Ser/Server.h:691 ]: [ServerThr.7] Updating statistics

[ D 2022-10-13 12:07:40.0053 3592350/Tp Ser/Server.h:691 ]: [ServerThr.9] Updating statistics

[ D 2022-10-13 12:07:40.0047 3592350/Te Ser/Server.h:691 ]: [ServerThr.4] Updating statistics

[ D 2022-10-13 12:07:40.0048 3592350/T1w Ser/Server.h:691 ]: [ServerThr.31] Updating statistics

[ D 2022-10-13 12:07:40.0048 3592350/T16 Ser/Server.h:691 ]: [ServerThr.18] Updating statistics

[ D 2022-10-13 12:07:40.0048 3592350/T2e Ser/Server.h:691 ]: [ServerThr.40] Updating statistics

[ D 2022-10-13 12:07:40.0049 3592350/Tt Ser/Server.h:691 ]: [ServerThr.11] Updating statistics

[ D 2022-10-13 12:07:40.0049 3592350/Ta age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0049 3592350/Td age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0049 3592350/T1y Ser/Server.h:691 ]: [ServerThr.32] Updating statistics

[ D 2022-10-13 12:07:40.0049 3592350/T1a Ser/Server.h:691 ]: [ServerThr.20] Updating statistics

[ D 2022-10-13 12:07:40.0050 3592350/T1e Ser/Server.h:691 ]: [ServerThr.22] Updating statistics

[ D 2022-10-13 12:07:40.0050 3592350/T8 age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache

[ D 2022-10-13 12:07:40.0050 3592350/Tz Ser/Server.h:691 ]: [ServerThr.14] Updating statistics

[ D 2022-10-13 12:07:40.0051 3592350/Tr Ser/Server.h:691 ]: [ServerThr.10] Updating statistics

[ D 2022-10-13 12:07:40.0051 3592350/T1i Ser/Server.h:691 ]: [ServerThr.24] Updating statistics

[ D 2022-10-13 12:07:40.0051 3592350/T14 Ser/Server.h:691 ]: [ServerThr.17] Updating statistics

[ D 2022-10-13 12:07:40.0052 3592350/T1u Ser/Server.h:691 ]: [ServerThr.30] Updating statistics

[ D 2022-10-13 12:07:40.0052 3592350/Tm Ser/Server.h:691 ]: [ServerThr.8] Updating statistics

[ D 2022-10-13 12:07:40.0052 3592350/T21 Ser/Server.h:691 ]: [ServerThr.33] Updating statistics

[ D 2022-10-13 12:07:40.0053 3592350/T1o Ser/Server.h:691 ]: [ServerThr.27] Updating statistics

[ D 2022-10-13 12:07:40.0055 3592350/Ta Ser/Server.h:691 ]: [ServerThr.2] Updating statistics

[ D 2022-10-13 12:07:40.0055 3592350/Td Ser/Server.h:691 ]: [ServerThr.3] Updating statistics

[ D 2022-10-13 12:07:40.0055 3592350/T8 Ser/Server.h:691 ]: [ServerThr.1] Updating statistics

I've tried changing PassengerLogLevel in /etc/apache2/mods-enabled/passenger.conf (which defaults to 5 (debug)) to the warning level (2), but nothing improved: I'm still getting tons of these log entries.
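One way to check whether a log level change is actually taking effect is to tally the entries by severity letter and source location before and after restarting Apache. The following is only a minimal sketch, assuming every entry follows the `[ LEVEL DATE TIME PID/THREAD SOURCE ]: MESSAGE` layout seen in the excerpt above; the names `tally` and `tally_file` are made up for illustration and are not part of Passenger or Plesk.

```python
import re
from collections import Counter

# Assumed entry layout, taken from the excerpt in this post:
# [ D 2022-10-13 12:07:40.0001 3592350/T1g age/Cor/Con/TurboCaching.h:246 ]: Clearing turbocache
LINE_RE = re.compile(
    r"^\[ (?P<level>\w) (?P<date>\S+) (?P<time>\S+) "
    r"(?P<pid>\d+)/(?P<thread>\S+) (?P<source>\S+) \]: (?P<message>.*)$"
)


def tally(lines):
    """Count entries per (level, source location); lines that do not match are skipped."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line.strip())
        if match:
            counts[(match["level"], match["source"])] += 1
    return counts


def tally_file(path):
    """Hypothetical helper: tally a log file on disk, tolerating odd bytes."""
    with open(path, errors="replace") as handle:
        return tally(handle)
```

Calling `tally_file(...)` on the log and printing `.most_common(10)` shows which sources dominate. If entries with level `D` keep appearing after the restart, the setting in that file is probably not the one in effect.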

Any idea how to deal with this issue?