- Server operating system version

- Debian GNU/Linux 10 (buster)

- Plesk version and microupdate number

- 18.0.52 Update #3

Hello everyone,

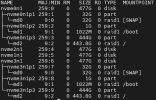

We are planning a major server migration so that all our domains finally end up in a single Plesk instance on one server, but now we are hitting our storage limits.

For this we ordered a new hard drive, which I now need to integrate correctly and without data loss, preferably while the server keeps running. So far, two hard drives have been installed in the server as a RAID, with the mount point at root (/).

I've already read through a number of guides and explanations. It seems to be possible, but everyone does it differently, and none of the approaches is really simple or risk-free.

Has anyone here (please!) ever done something similar and can give me some advice or an approach that should work?

I'm really grateful for any help. I do have some experience with Linux administration, but I have never expanded the storage of a live server hosting 360 domains while it was running. Lesson learned: keep your eyes open when looking for a job, and I'll take that to heart next time. But for now I'm at the wrong company and somehow MUST get this done.

If further information is needed for assistance, I will be happy to provide it.

With one eye laughing and one eye crying,

Tim