- Server operating system version: Debian 10.12 x86_64
- Plesk version and microupdate number: Plesk Obsidian 18.0.43.1

Hi!

I currently have an issue that affects only one instance of my Plesk infrastructure.

I've even gone to the extreme of rebuilding the whole setup (Plesk and FTP servers).

The issue persists after the rebuilds.

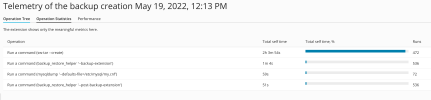

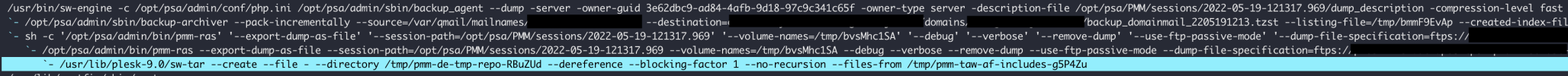

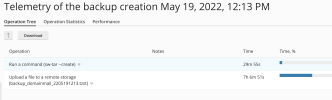

Backups to a remote FTP(S) storage take forever to complete.

A full server backup of 61.9 GB takes 12 hours.

Full and multi-volume backups produce the same results.
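For reference, the 12-hour figure can be turned into a sustained transfer rate. A minimal sketch of the arithmetic, assuming "61.9 GB" means 61.9 × 10^9 bytes and the job ran for exactly 12 hours; `effective_rate` is a hypothetical helper name:

```python
def effective_rate(size_bytes: float, seconds: float) -> tuple[float, float]:
    """Return (MB/s, Mbit/s) in decimal units for size_bytes moved in seconds."""
    bytes_per_second = size_bytes / seconds
    return bytes_per_second / 1e6, bytes_per_second * 8 / 1e6


# Assumption: 61.9 GB = 61.9 * 10**9 bytes, and the backup took exactly 12 hours.
mb_s, mbit_s = effective_rate(61.9e9, 12 * 3600)
print(f"{mb_s:.2f} MB/s ({mbit_s:.2f} Mbit/s)")  # prints "1.43 MB/s (11.46 Mbit/s)"
```

Because the 12 hours covers the whole backup job, this is an overall average rather than a pure network measurement.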

Server Information:

```
plesk -v
Product version: Plesk Obsidian 18.0.43.1
OS version: Debian 10.12 x86_64
Build date: 2022/04/14 18:00
Revision: 1a6b26fb2fd0ac923f3ca10bdfd13b721cb5c676
```

Memory Usage (with backup/transfer):

```
free -m
              total        used        free      shared  buff/cache   available
Mem:           8192        1079        6575         107         536        7112
Swap:             0           0           0
```

CPUs + Load:

```
cat /proc/cpuinfo | grep processor | wc -l
8

09:56:03 up 1 day, 10 min, 3 users, load average: 3.04, 4.85, 5.06
```

Configs:

Firewalls are disabled on both the remote FTP/SFTP server and the Plesk instance.

Both machines are currently on the same private LAN for testing.

Backup Manager -> Remote Storage Settings -> FTP:

- Use Passive Mode: Yes
- Use FTPS: Yes

Backup Settings: all defaults

Only a single entry of scheduled backups in the DB:

```
MariaDB [psa]> select count(*) from BackupsScheduled;
+----------+
| count(*) |
+----------+
|        1 |
+----------+
1 row in set (0.000 sec)
```

Some manual CLI tests for sanity (disclaimer: they all perform as expected):

FTP

```
ftp> put backup_domainmail_2205181657.tzst
local: backup_domainmail_2205181657.tzst remote: backup_domainmail_2205181657.tzst
200 PORT command successful
150 Connecting to port 60227
226-File successfully transferred
226 287.199 seconds (measured here), 20.97 Mbytes per second
6316500889 bytes sent in 287.20 secs (20.9746 MB/s)
```
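The FTP transcript above shows `200 PORT command successful`, so that test ran in active mode, apparently over plain FTP, while the Plesk remote storage is configured for passive mode with FTPS. One way to narrow things down is to time an upload with the same settings Plesk uses. A minimal sketch with Python's standard `ftplib`, assuming Plesk also encrypts the data channel (PROT P); the host, credentials and the helper names `timed_upload` and `ftps_passive_test` are hypothetical:

```python
import ftplib
import time
from typing import BinaryIO


def timed_upload(ftp, remote_name: str, fp: BinaryIO, blocksize: int = 8192) -> tuple[int, float]:
    """Upload fp with STOR and return (bytes_sent, seconds_elapsed)."""
    sent = 0

    def count(block: bytes) -> None:
        nonlocal sent
        sent += len(block)  # storbinary calls this once per block sent

    start = time.monotonic()
    ftp.storbinary(f"STOR {remote_name}", fp, blocksize=blocksize, callback=count)
    return sent, time.monotonic() - start


def ftps_passive_test(host: str, user: str, password: str, local_path: str) -> None:
    # Hypothetical placeholders: use the same remote storage and credentials as Plesk.
    with ftplib.FTP_TLS(host) as ftp:
        ftp.login(user, password)  # FTP_TLS.login() secures the control channel first
        ftp.prot_p()               # assumption: data channel is encrypted as well
        ftp.set_pasv(True)         # passive mode (ftplib's default), as in Plesk's settings
        with open(local_path, "rb") as fp:
            remote_name = local_path.rsplit("/", 1)[-1]
            sent, secs = timed_upload(ftp, remote_name, fp)
    print(f"{sent} bytes in {secs:.1f} s = {sent / secs / 1e6:.2f} MB/s")
```

For example, `ftps_passive_test("<ftp host>", "<user>", "<password>", "backup_domainmail_2205181657.tzst")`. Comparing the result with the active-mode run above, and trying a larger `blocksize`, are cheap experiments.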

SFTP

```
sftp> put backup_domainmail_2205181657.tzst
Uploading backup_domainmail_2205181657.tzst to /data/backup_domainmail_2205181657.tzst
backup_domainmail_2205181657.tzst             100% 6024MB  73.9MB/s   01:21
```

RSYNC

```
backup_domainmail_2205181657.tzst
  6,316,500,889 100%   84.27MB/s    0:01:11 (xfr#1, to-chk=0/1)
```
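Turning the manual transfers into rates makes the gap easier to see. A minimal sketch using the byte count and durations from the transcripts above (the sftp and rsync times are the rounded values those tools print), assuming 61.9 GB means 61.9 × 10^9 bytes. Rates here are decimal MB/s, so the FTP figure comes out near 22 MB/s, while the ftp client's own 20.97 MB/s matches MiB/s:

```python
# Byte count and durations copied from the manual transfer transcripts.
FILE_BYTES = 6_316_500_889
manual_tests = {
    "FTP": 287.20,  # seconds, from "6316500889 bytes sent in 287.20 secs"
    "SFTP": 81.0,   # 01:21 as printed by sftp (whole seconds)
    "rsync": 71.0,  # 0:01:11 as printed by rsync (whole seconds)
}
BACKUP_BYTES = 61.9e9  # assumption: 61.9 GB in decimal units


def projected_hours(rate_bytes_per_s: float, total_bytes: float = BACKUP_BYTES) -> float:
    """Hours needed to move total_bytes at a constant rate."""
    return total_bytes / rate_bytes_per_s / 3600


for name, secs in manual_tests.items():
    rate = FILE_BYTES / secs
    print(f"{name:5s} {rate / 1e6:6.2f} MB/s -> {projected_hours(rate):.2f} h for 61.9 GB")
```

At any of these rates a 61.9 GB backup would move in well under an hour, compared with the 12 hours observed through Plesk.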

10-minute transfer speed sample from the Plesk server while the backup is running:

```
Sampling eth0 (600 seconds average)..
376333 packets sampled in 600 seconds
Traffic average for eth0

      rx       488.95 kbit/s           583 packets/s
      tx        13.25 Mbit/s            43 packets/s
```

So the throughput achieved with the backup setup built into Plesk is only 1.65625 MB/s (13.25 Mbit/s ÷ 8, averaged over the 10-minute sample).
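To repeat this measurement without vnstat, a comparable average can be taken from the kernel's per-interface counters in `/proc/net/dev`. A minimal Linux-only sketch, assuming the interface is `eth0` as in the sample and decimal units; the function names are hypothetical and the result may differ slightly from vnstat's figure:

```python
import time


def read_tx_bytes(iface: str, net_dev_text: str) -> int:
    """Return the transmitted-bytes counter for iface from /proc/net/dev contents."""
    for line in net_dev_text.splitlines()[2:]:  # the first two lines are headers
        name, sep, counters = line.partition(":")
        if sep and name.strip() == iface:
            # 8 receive fields come first; index 8 is transmit bytes
            return int(counters.split()[8])
    raise ValueError(f"interface {iface!r} not found")


def rates(before: int, after: int, seconds: float) -> tuple[float, float]:
    """Convert two byte-counter readings into (Mbit/s, MB/s), decimal units."""
    bytes_per_s = (after - before) / seconds
    return bytes_per_s * 8 / 1e6, bytes_per_s / 1e6


def tx_rate(iface: str = "eth0", seconds: float = 600.0) -> tuple[float, float]:
    """Sample the tx counter twice, `seconds` apart, and return the average rate."""
    with open("/proc/net/dev") as f:
        before = read_tx_bytes(iface, f.read())
    time.sleep(seconds)
    with open("/proc/net/dev") as f:
        after = read_tx_bytes(iface, f.read())
    return rates(before, after, seconds)
```

Calling `tx_rate("eth0", 600)` while a backup runs gives a figure roughly comparable with the vnstat tx line above.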

The server also has ample disk space in /var/lib/psa/dumps/ for creating the backup before it is transferred.

The server's storage is a set of NVMe drives.

I've tried to be as thorough as possible in testing.

However, I'm now slowly approaching the "at a loss" stage.

Any help, feedback or test ideas would be appreciated.

Cheers,

Carl