Hello,

I've been struggling with incidents of very high load average (50 to 100+). The server becomes unresponsive, and sometimes not even an SSH connection is possible. This is how the incidents typically appear in Plesk Advanced Monitoring:

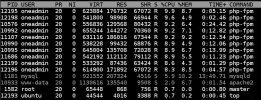

Nothing helpful shows up in the system logs. When I do manage to get in, all I can see is a growing number of php-fpm processes, belonging to WordPress instances, in uninterruptible sleep (state D). If I'm lucky and fast enough to log in, restarting the apache2 service almost always solves the problem.

The incidents occur at random, anywhere from three times a day to once a week. Sometimes the load returns to normal on its own after 30 minutes to 5 hours. If it doesn't, only a forced stop and restart of the VPS resolves the issue. Months ago, the problem became considerably worse after MySQL remote access had to be allowed.

This is an AWS instance running an up-to-date Ubuntu 18.04 and the latest Plesk Obsidian. It hosts dozens of low-traffic WordPress instances on PHP 7.4, served by Apache with a local MySQL. There are no mail services except msmtp relaying to an external server.

Abusive external actors constantly probe the WordPress installations for vulnerable code. Fail2ban is set up to deal with them and usually bans tens of IPs, but on some days that number has grown to hundreds.

Although kind and attentive, Plesk Support has not been able to identify the cause or provide effective help.

Nothing seems to indicate that the uninterruptible state is caused by disk operations. From what I've read, it may instead be caused by pending cURL operations to unresponsive external hosts. If so, changing the cURL or FPM timeouts (for example `request_terminate_timeout`) might solve the problem.
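To check what the stuck processes are actually waiting on, something like the following could be run during an incident. It is only a minimal sketch, assuming a Linux `/proc` filesystem and enough privileges to read other users' entries; the function names `parse_stat` and `uninterruptible_processes` are made up for illustration. It lists every process in state D together with the kernel wait channel reported in `/proc/<pid>/wchan`.

```python
import os


def parse_stat(stat_text):
    """Return (pid, comm, state) from the contents of /proc/<pid>/stat."""
    # The command name is wrapped in parentheses and may itself contain
    # spaces or ')', so split on the LAST closing parenthesis.
    left, _, right = stat_text.rpartition(")")
    pid_str, _, comm = left.partition("(")
    state = right.split()[0]
    return int(pid_str), comm, state


def uninterruptible_processes(proc_root="/proc"):
    """List (pid, comm, wchan) for every process currently in state D."""
    results = []
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # not a process directory
        try:
            with open(os.path.join(proc_root, entry, "stat")) as f:
                pid, comm, state = parse_stat(f.read())
        except (OSError, ValueError, IndexError):
            continue  # process exited or entry is unreadable
        if state != "D":
            continue
        try:
            with open(os.path.join(proc_root, entry, "wchan")) as f:
                wchan = f.read().strip() or "?"
        except OSError:
            wchan = "?"  # wait channel not readable
        results.append((pid, comm, wchan))
    return results


if __name__ == "__main__":
    for pid, comm, wchan in uninterruptible_processes():
        print(f"{pid}\t{comm}\t{wchan}")
```

If the wait channels of the php-fpm processes point at filesystem or block-layer functions, that would argue for disk or network storage; anything else would at least narrow down where to look.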

Would anybody agree with the timeout suggestion? If so, how can the timeouts be set server-wide?

If not, any other thoughts, help, or suggestions are more than welcome!

Thanks!
