KirkM


Remote FTP backups had been working perfectly until the panel was updated to 18.0.30. Now all backups, both domain and full server, are throwing error messages:


FULL SERVER:

The following error occurred during the scheduled backup process:

Export error: Size of volume backup_2009170035.tar 20805223935 does not match expected one 20806575198. The remote backup may not be restored.; Unable to validate the remote backup. It may not be restored. Error: Failed to exec pmm-ras: Exit code: 119: Import error: Unable to find archive metadata. The archive is not valid Plesk backup or has been created in an unsupported Plesk version

DOMAINS:

Export error: Size of volume backup_domain.com_2009170045.tar 1773085695 does not match expected one 1773713659. The remote backup may not be restored.

Unable to validate the remote backup. It may not be restored. Error: Failed to exec pmm-ras: Exit code: 119: Import error: Unable to find archive metadata. The archive is not valid Plesk backup or has been created in an unsupported Plesk version
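For reference, the size differences in the two export errors can be worked out directly. Here is a minimal Python sketch. It assumes that in each message the first number is the size of the volume found on the FTP storage and the second is the size Plesk expected. The wording "does not match expected one" suggests this order but does not state it outright.

```python
# Byte counts copied from the two "Export error" messages.
# Assumption: in each pair the first number is the size of the volume found on
# the FTP storage and the second is the size Plesk expected it to have.
REPORTED = {
    "backup_2009170035.tar": (20805223935, 20806575198),
    "backup_domain.com_2009170045.tar": (1773085695, 1773713659),
}


def shortfall(actual: int, expected: int) -> int:
    """Bytes missing from the remote volume (negative if it is larger than expected)."""
    return expected - actual


for name, (actual, expected) in REPORTED.items():
    missing = shortfall(actual, expected)
    print(f"{name}: {missing} bytes short ({missing / 10**6:.2f} MB)")
```

Under that reading, the full-server volume is about 1.35 MB short and the domain volume is about 0.63 MB short. That would fit uploads that stopped before the end of the file. If the order is the other way round, the same numbers mean the remote copies are larger by those amounts.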

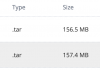

I'm using only a couple hundred MB of a 2 TB remote backup drive, so it isn't out of space. It doesn't appear to be a connection issue either, since the panel does connect to that drive and writes the backup files. *EDIT: I also just noticed that the file size listed on the remote backup is 0.1 GB larger than the size shown in the Plesk panel for the same backup.* The backups are simply unusable. I'm fairly sure 18.0.30 broke something, because it also caused the error described in the thread "Resolved - Checking the consistency of the Plesk database Inconsistency in the table 'smb_roleServicePermissions'".
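When checking the 0.1 GB gap, it may help to compare exact byte counts rather than the rounded figures each tool displays. For example, the domain volume from the error message, 1773085695 bytes, is about 1.77 GB in decimal units but about 1.65 GiB in binary units. A display that mixes the two could therefore show a gap of roughly that size on its own. The following sketch uses Python's standard `ftplib`. It assumes the FTP server supports the `SIZE` command. The host, user name, password and paths in the example comment are hypothetical placeholders.

```python
import ftplib
import os
from typing import Optional, Tuple


def remote_size(host: str, user: str, password: str, path: str) -> Optional[int]:
    """Ask the FTP server for a file's size in bytes via the SIZE command.

    SIZE is an extension rather than part of the original FTP standard, so some
    servers reject it (ftplib then raises error_perm) or give an unexpected
    reply (ftplib then returns None).
    """
    with ftplib.FTP(host) as ftp:
        ftp.login(user, password)
        ftp.voidcmd("TYPE I")  # binary mode, so the reported size is the raw byte count
        return ftp.size(path)


def local_size(path: str) -> int:
    """Size in bytes of a local copy of the same backup file."""
    return os.path.getsize(path)


def in_units(n_bytes: int) -> Tuple[float, float]:
    """Return the same byte count as (decimal GB, binary GiB)."""
    return n_bytes / 10**9, n_bytes / 2**30


# Example with hypothetical host, credentials and path (not run here):
# size = remote_size("ftp.example.com", "backupuser", "secret",
#                    "/backups/backup_domain.com_2009170045.tar")
# print(size, in_units(size))
```

If the exact byte counts agree and only the displayed values differ, the 0.1 GB difference is likely a units or rounding artifact. It would not then be a second problem.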

That issue was fixed with the solution posted in that thread. However, I can't find a way to fix the backup problem caused by 18.0.30.
