- Server operating system version

- CentOS Linux 7.9.2009 (Core)

- Plesk version and microupdate number

- Plesk Obsidian Version 18.0.49 Update #2

Hi,

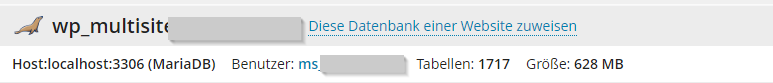

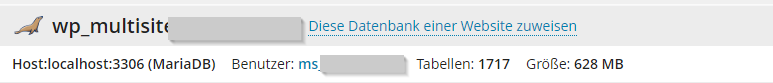

A colleague migrated a WordPress Multisite with quite a lot of tables (1717 tables, 628 MB) to our central Plesk server. Now the backup is failing with this warning:

Warning: Database "wp_multisite_XYZ"

Unable to make database dump. Error: Failed to exec mysqldump: Exit code: 2: mysqldump: Got error: 1016: "Can't open file: './wp_multisite_XYZ/wp_multi_20_icl_content_status.frm' (errno: 24)" when using LOCK TABLES

As mentioned, the database currently has 1717 tables but does not use much space. The Plesk database tool "check and repair" does not find any problems.

This article (in German) says that mysqldump supposedly cannot lock more than 330 tables:

MySQLDump – Can’t open file (errno: 24) when using LOCK TABLES - SysADMINsLife

www.sysadminslife.com

Is there any (temporary?) workaround that does not require reducing the number of tables by ~1400?
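For reference, the `errno: 24` at the end of the mysqldump error is the operating-system error `EMFILE` ("Too many open files"). By default mysqldump locks all tables of a database with a single `LOCK TABLES`, so the server has to have all of them open at the same time. The Python sketch below decodes the error number, reads the soft "Max open files" value from the text of `/proc/<pid>/limits`, and gives a rough upper bound on how many tables fit under that limit. The helper names are hypothetical, and the example inputs (a 1024 descriptor limit, 3 files per table) are illustrative assumptions, not values measured on this server.

```python
import errno
import os


def describe_errno(code: int) -> str:
    """Map a numeric errno (as printed by mysqldump) to its symbolic name and message."""
    name = errno.errorcode.get(code, "UNKNOWN")
    return f"{name}: {os.strerror(code)}"


def max_open_files(limits_text: str) -> int:
    """Return the soft 'Max open files' limit from the text of /proc/<pid>/limits."""
    label = "Max open files"
    for line in limits_text.splitlines():
        if line.startswith(label):
            # Remaining columns: soft limit, hard limit, unit ("files").
            soft = line[len(label):].split()[0]
            # int() raises ValueError if the soft limit is "unlimited".
            return int(soft)
    raise ValueError("no 'Max open files' line found")


def max_lockable_tables(fd_limit: int, files_per_table: int, reserved: int = 0) -> int:
    """Hypothetical rough upper bound on how many tables can be open at once.

    Assumes every open table costs `files_per_table` descriptors and that
    `reserved` descriptors are already used for other purposes.
    """
    return max(fd_limit - reserved, 0) // files_per_table


if __name__ == "__main__":
    print(describe_errno(24))  # on Linux: EMFILE: Too many open files
    # Illustrative assumptions only: 1024 soft limit, 3 files per table.
    print(max_lockable_tables(1024, files_per_table=3))  # 341
```

On the server itself, the text would come from `/proc/<pid>/limits` of the running mysqld process; the limit of the Python interpreter is irrelevant, which is why the sketch parses that file's format instead of calling `resource.getrlimit`. How many descriptors one open table really costs depends on the storage engine and settings such as `table_open_cache`, so treat the result as an order-of-magnitude check only.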

Regards

Martin